Given a single image of a 3D object, we propose a novel method, ConsistNet, that generates multiple images of the same object as if they were captured from different viewpoints, while effectively exploiting the 3D (multi-view) consistency among the generated images. Central to our method is a multi-view consistency block that enables information exchange across multiple single-view diffusion processes based on the underlying multi-view geometry principles. ConsistNet is an extension to the standard latent diffusion model (LDM) and consists of two sub-modules: (a) a view aggregation module that unprojects multi-view features into global 3D volumes and infers consistency, and (b) a ray aggregation module that samples and aggregates 3D-consistent features back to each view to enforce consistency. Our approach departs from previous methods in multi-view image generation in that it can be easily dropped into pre-trained LDMs without requiring explicit pixel correspondences or depth prediction. Experiments show that our method effectively learns 3D consistency over a frozen Zero123 backbone and can generate 16 surrounding views of the object within 40 seconds on a single A100 GPU.

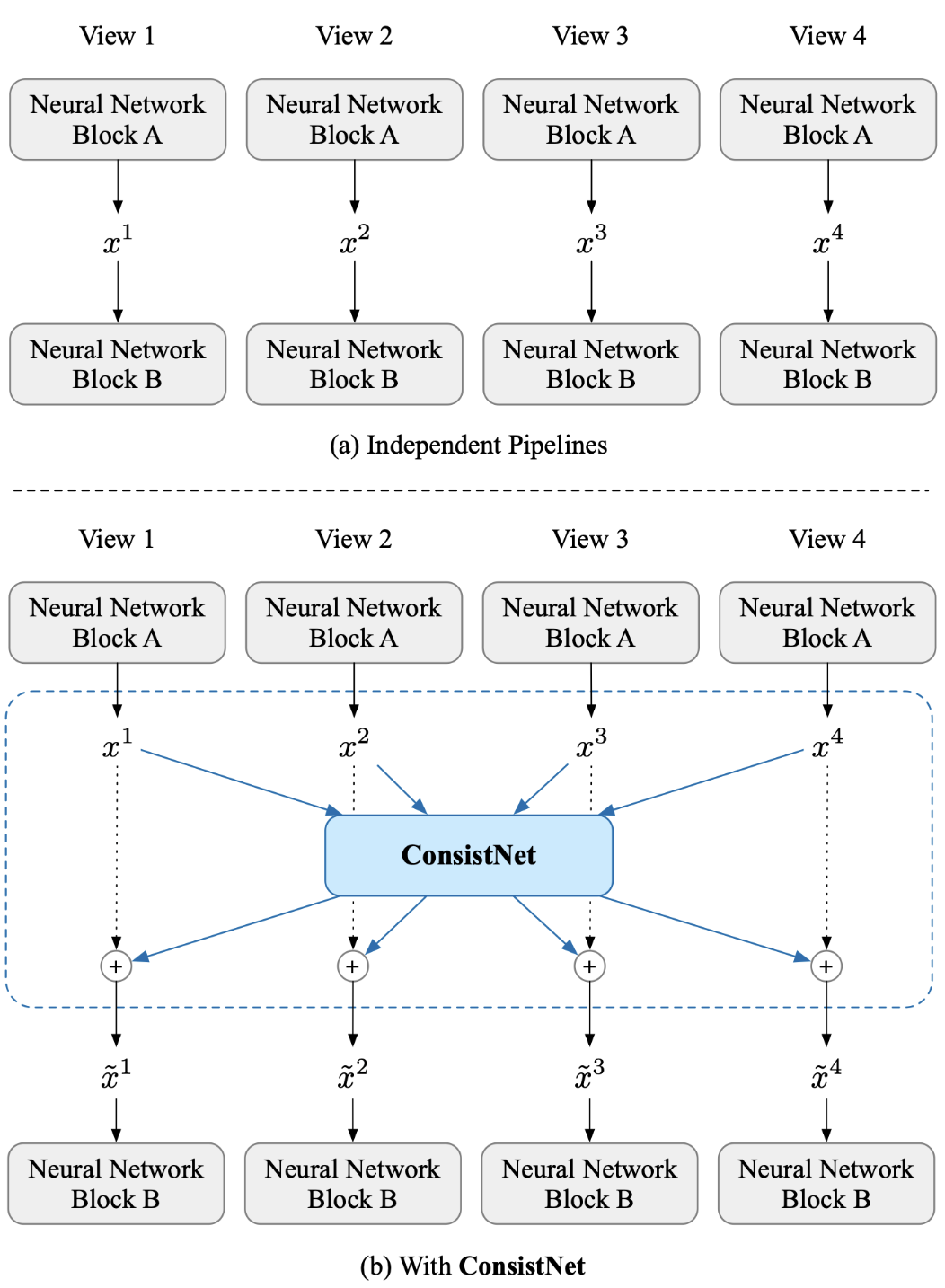

At the core of our method is an add-on block to pre-trained Latent Diffusion Models (LDMs), called ConsistNet, that exchanges information between parallel LDMs running at different viewpoints based on underlying multi-view geometry principles.

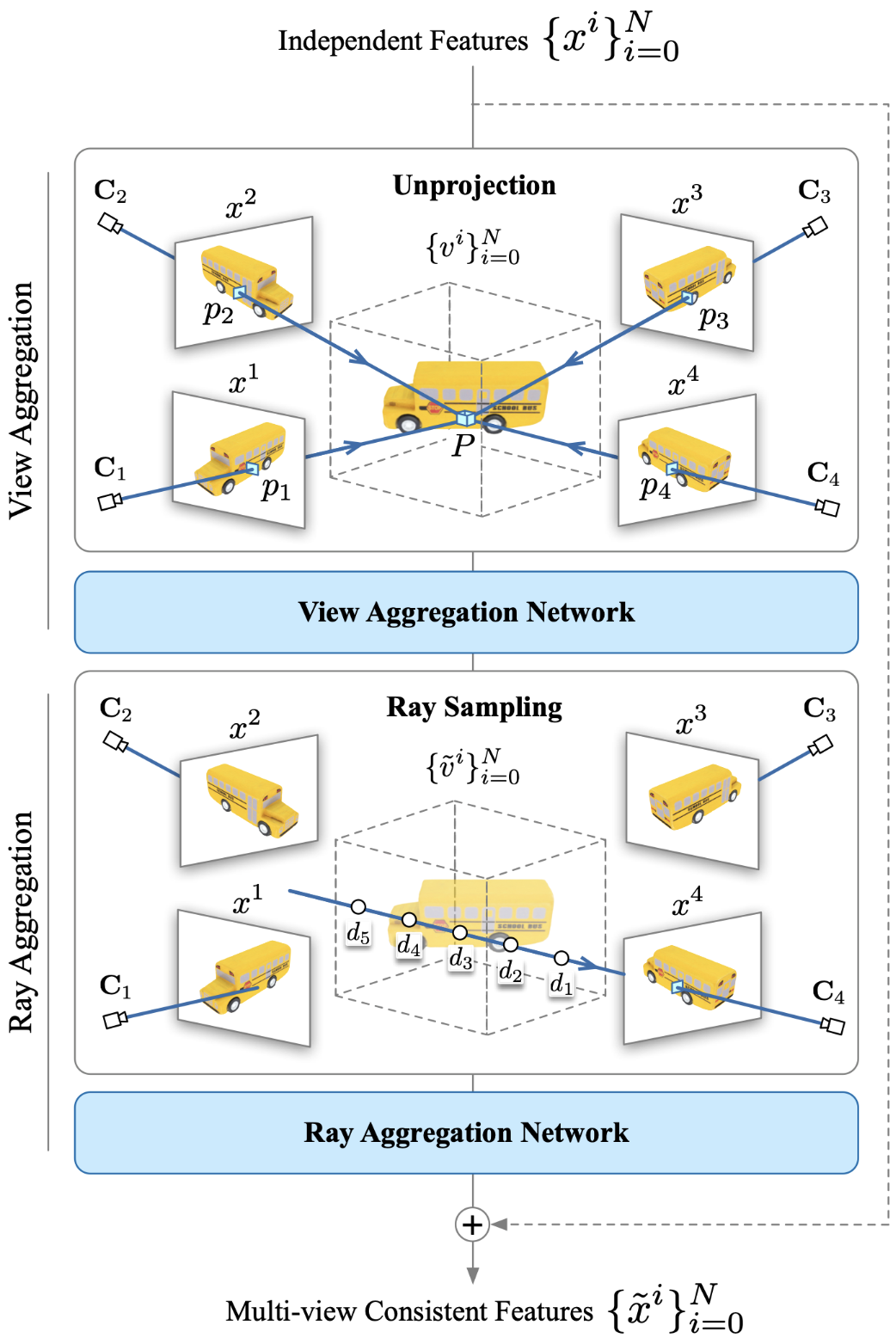

The ConsistNet block consists of two sub-modules: (a) a view aggregation module that unprojects multi-view features into global 3D volumes and infers consistency, and (b) a ray aggregation module that samples and aggregates 3D-consistent features back to each view to enforce consistency.
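The two sub-modules can be illustrated with a small NumPy sketch. This is not the authors' implementation: the real modules are learned, whereas here "inferring consistency" is approximated by the per-voxel mean and variance across views, and ray aggregation by a plain average along each pixel ray. The camera layout, the unit-cube volume, nearest-neighbour sampling, and all function names (`look_at_camera`, `view_aggregation`, `ray_aggregation`) are assumptions made for illustration.

```python
import numpy as np

def look_at_camera(azimuth, radius=2.0):
    """World-to-camera (R, t) for a camera on a circle, looking at the origin (assumed layout)."""
    eye = np.array([radius * np.cos(azimuth), radius * np.sin(azimuth), 0.0])
    forward = -eye / np.linalg.norm(eye)                 # camera z axis
    right = np.cross(forward, np.array([0.0, 0.0, 1.0]))
    right /= np.linalg.norm(right)                       # camera x axis
    down = np.cross(forward, right)                      # camera y axis
    R = np.stack([right, down, forward])
    return R, -R @ eye

def make_grid(res):
    """Voxel centres of a res^3 grid covering the cube [-0.5, 0.5]^3, shape (res^3, 3)."""
    c = (np.arange(res) + 0.5) / res - 0.5
    return np.stack(np.meshgrid(c, c, c, indexing="ij"), axis=-1).reshape(-1, 3)

def view_aggregation(feats, cams, K, grid):
    """Unproject features (V, C, H, W) onto voxels; return mean and variance over views, (N, C) each."""
    V, C, H, W = feats.shape
    samples = np.zeros((V, len(grid), C))
    valid = np.zeros((V, len(grid)), dtype=bool)
    for v, (R, t) in enumerate(cams):
        uvw = (grid @ R.T + t) @ K.T                     # project voxel centres into view v
        u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
        w = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
        ok = (uvw[:, 2] > 0) & (u >= 0) & (u < W) & (w >= 0) & (w < H)
        samples[v, ok] = feats[v][:, w[ok], u[ok]].T     # nearest-neighbour lookup
        valid[v] = ok
    count = np.maximum(valid.sum(0), 1)[:, None]
    mean = samples.sum(0) / count
    var = ((samples - mean) ** 2 * valid[..., None]).sum(0) / count
    return mean, var

def ray_aggregation(volume, cam, K, H, W, res, near=1.0, far=3.0, n_samples=32):
    """Average volume features (res^3, C) along each pixel ray of one view; returns (C, H, W)."""
    R, t = cam
    ys, xs = np.mgrid[0:H, 0:W]
    pix = np.stack([xs.ravel(), ys.ravel(), np.ones(H * W)], axis=1)
    dirs_cam = pix @ np.linalg.inv(K).T                  # ray points at depth 1, camera frame
    depths = np.linspace(near, far, n_samples)
    pts_cam = dirs_cam[:, None, :] * depths[None, :, None]
    pts_world = (pts_cam - t) @ R                        # inverse of x_cam = R x + t
    idx = np.floor((pts_world + 0.5) * res).astype(int)  # voxel index per sample
    inside = np.all((idx >= 0) & (idx < res), axis=-1)
    i = np.clip(idx, 0, res - 1)
    flat = (i[..., 0] * res + i[..., 1]) * res + i[..., 2]
    sampled = volume[flat] * inside[..., None]           # zero out samples outside the cube
    hits = np.maximum(inside.sum(1), 1)[:, None]
    return (sampled.sum(1) / hits).T.reshape(-1, H, W)

# Toy setup: 4 views of random features, an 8^3 volume, and a made-up pinhole intrinsic matrix.
V, C, H, W, res = 4, 8, 16, 16, 8
K = np.array([[24.0, 0.0, W / 2], [0.0, 24.0, H / 2], [0.0, 0.0, 1.0]])
cams = [look_at_camera(2 * np.pi * v / V) for v in range(V)]
feats = np.random.default_rng(0).normal(size=(V, C, H, W))
mean, var = view_aggregation(feats, cams, K, make_grid(res))
volume = np.concatenate([mean, var], axis=1)             # per-voxel consistency features
per_view = [ray_aggregation(volume, cam, K, H, W, res) for cam in cams]
```

Each entry of `per_view` has shape `(2 * C, H, W)` and could be fed back into the corresponding view's feature map. Note that the only geometric inputs are the camera parameters; no pixel correspondences or depth maps are used, which matches the property described above.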

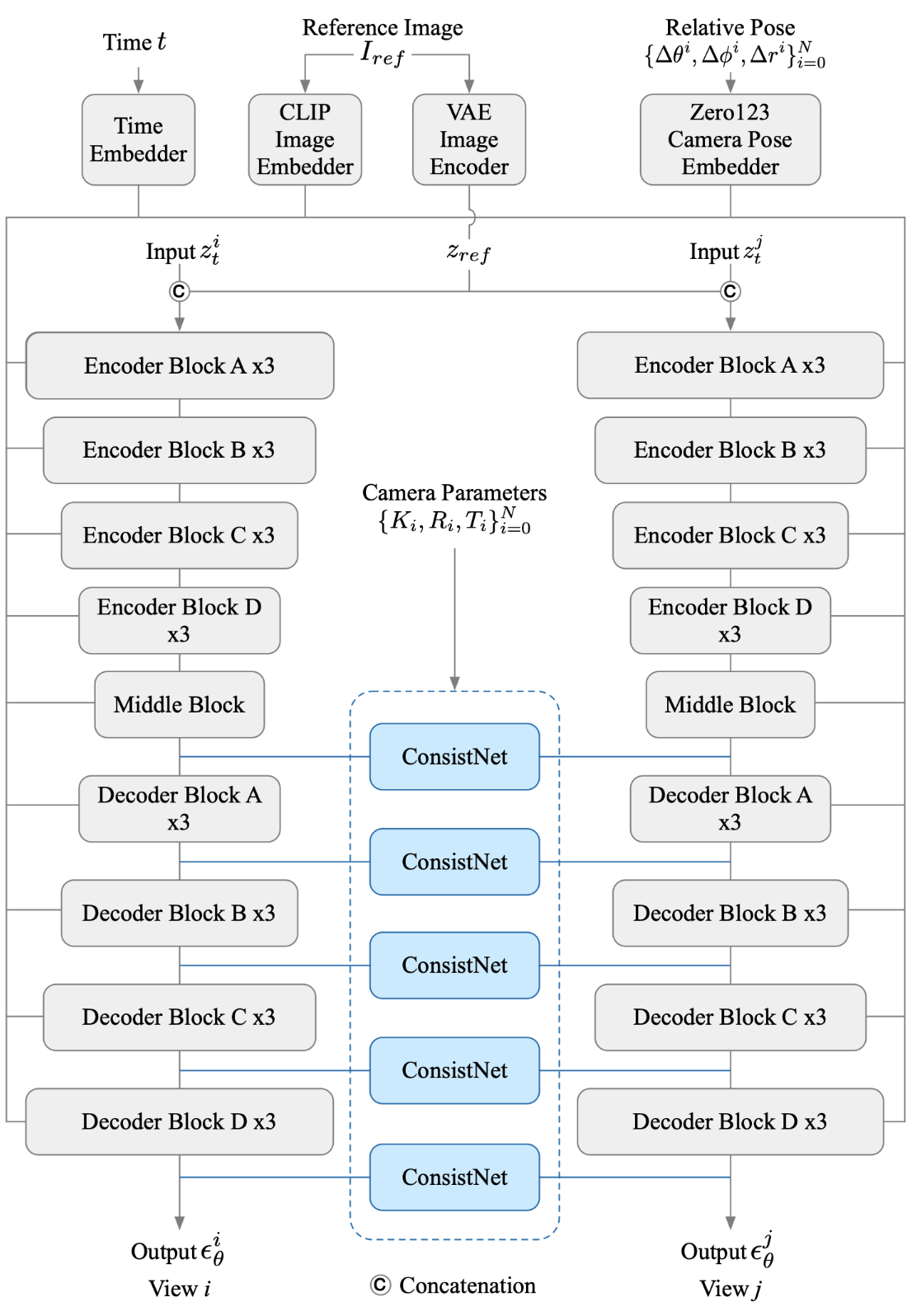

We use Zero123 as an example to showcase how ConsistNet can enforce 3D multi-view consistency in large pre-trained LDMs. ConsistNet blocks are plugged into every level of the decoder to enforce 3D consistency. ConsistNet is implemented in the HuggingFace Diffusers framework.
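The plug-in pattern can be sketched in PyTorch as follows. This is a simplified illustration under several assumptions rather than the actual ConsistNet or Zero123 code: the decoder levels are plain convolutions (real UNet up-blocks also take timestep and conditioning inputs and skip connections), and `CrossViewBlock` is a hypothetical stand-in that mixes views by mean pooling instead of the geometry-based view and ray aggregation. The class names are invented for this example.

```python
import torch
from torch import nn

class CrossViewBlock(nn.Module):
    """Hypothetical stand-in for a ConsistNet block: shares pooled features across views."""
    def __init__(self, channels):
        super().__init__()
        self.proj = nn.Conv2d(channels, channels, kernel_size=1)

    def forward(self, x):                                  # x: (B, V, C, H, W)
        B, V, C, H, W = x.shape
        pooled = x.mean(dim=1, keepdim=True).expand(B, V, C, H, W)
        update = self.proj(pooled.reshape(B * V, C, H, W)).reshape(B, V, C, H, W)
        return x + update                                  # residual add-on

class DecoderWithConsistency(nn.Module):
    """Frozen decoder levels with one trainable cross-view block after each level."""
    def __init__(self, decoder_levels, out_channels):
        super().__init__()
        self.levels = decoder_levels
        self.levels.requires_grad_(False)                  # backbone stays frozen
        self.blocks = nn.ModuleList([CrossViewBlock(c) for c in out_channels])

    def forward(self, x):                                  # x: (B, V, C, H, W)
        for level, block in zip(self.levels, self.blocks):
            B, V = x.shape[:2]
            y = level(x.flatten(0, 1))                     # each view is processed independently
            x = block(y.reshape(B, V, *y.shape[1:]))       # then views exchange information
        return x

torch.manual_seed(0)
levels = nn.ModuleList([nn.Conv2d(8, 8, 3, padding=1), nn.Conv2d(8, 4, 3, padding=1)])
model = DecoderWithConsistency(levels, out_channels=[8, 4])
x = torch.randn(2, 16, 8, 12, 12)                          # 2 objects, 16 views each
out = model(x)                                             # shape (2, 16, 4, 12, 12)
```

With this wiring, only the parameters in `model.blocks` receive gradients, so the pre-trained weights are left untouched while the add-on blocks learn to couple the otherwise independent per-view passes.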

@article{yang2023consistnet,
  title={ConsistNet: Enforcing 3D Consistency for Multi-view Images Diffusion},
  author={Yang, Jiayu and Cheng, Ziang and Duan, Yunfei and Ji, Pan and Li, Hongdong},
  journal={arXiv},
  year={2023}
}